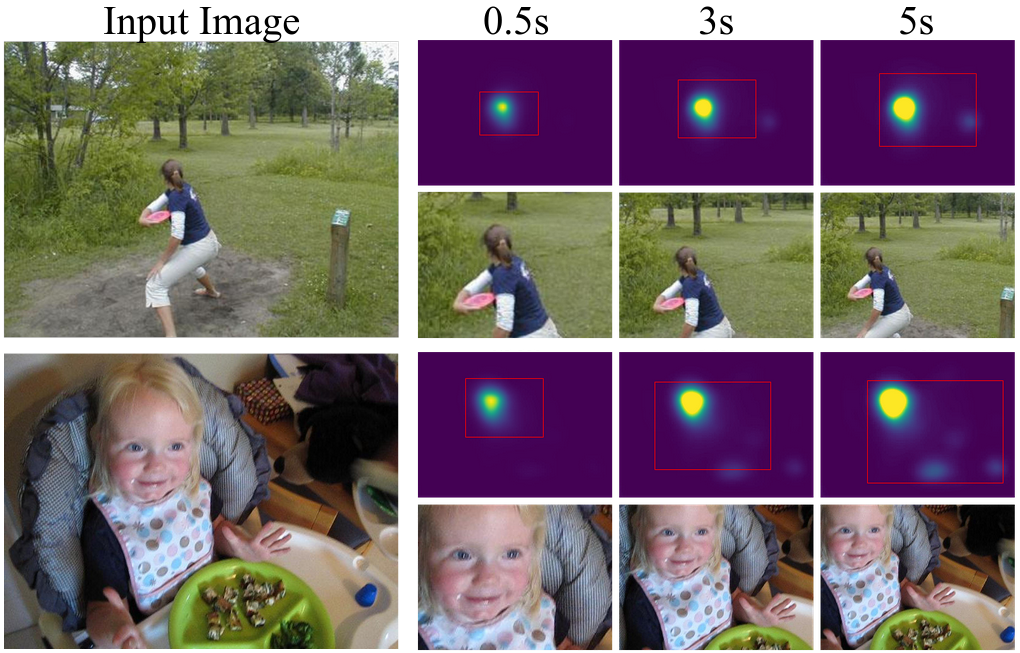

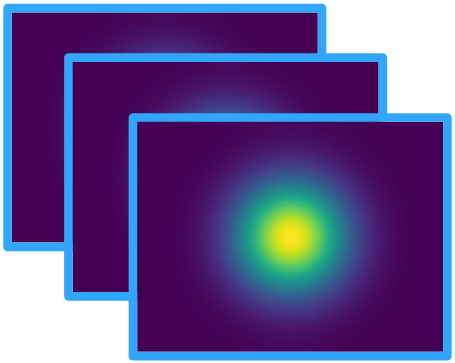

Example multi-duration predictions from our Multi-Duration Saliency Excited Model (MD-SEM).

Dataset

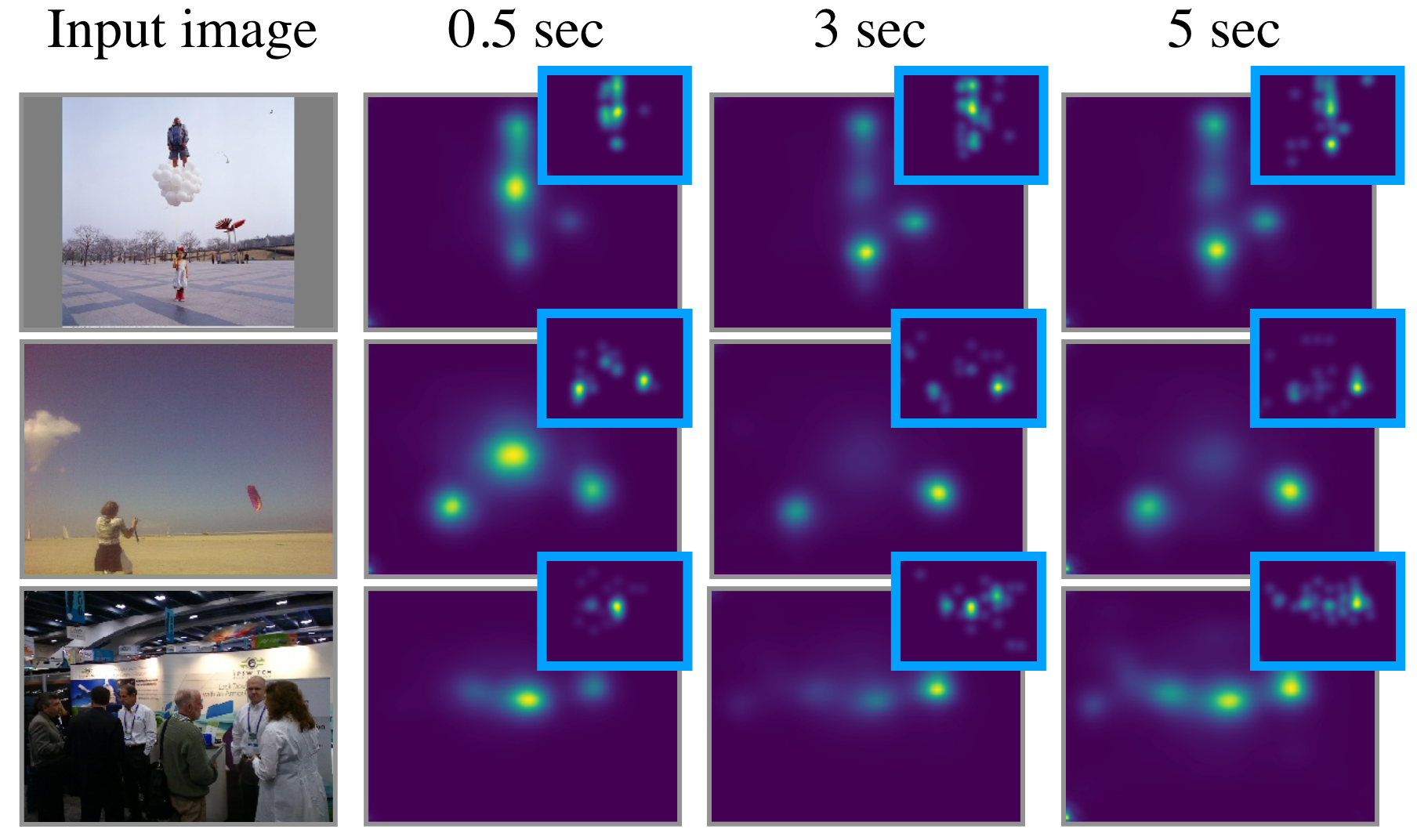

Example multi-duration saliency heatmaps from the CodeCharts1K dataset, featuring images taken from a variety of datasets.
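Saliency heatmaps like these are commonly built by placing a Gaussian at each recorded gaze point and normalizing the result. Repeating this once per viewing duration gives one map per duration. The sketch below is only an illustration of that generic technique and is not the CodeCharts1K processing pipeline. The function name `fixations_to_heatmap`, the `(row, col)` point format and the `sigma` value (in pixels) are all assumptions made here.

```python
import math
from typing import List, Tuple

def fixations_to_heatmap(points: List[Tuple[float, float]], height: int,
                         width: int, sigma: float) -> List[List[float]]:
    """Hypothetical helper: sum a Gaussian per gaze point, then scale so the peak is 1.0."""
    heat = [[0.0] * width for _ in range(height)]
    for (y, x) in points:
        for i in range(height):
            for j in range(width):
                # Isotropic Gaussian centred on the gaze point (assumed blur model).
                heat[i][j] += math.exp(-((i - y) ** 2 + (j - x) ** 2) / (2 * sigma ** 2))
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[v / peak for v in row] for row in heat]
    return heat

# One heatmap per viewing duration; the duration labels and points are made up.
gaze_by_duration = {"short": [(2, 3)], "long": [(2, 3), (0, 0), (4, 5)]}
maps = {d: fixations_to_heatmap(p, 5, 6, sigma=1.0) for d, p in gaze_by_duration.items()}
```

In practice `sigma` is usually tied to about one degree of visual angle for the viewing setup. The value used above is arbitrary.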

Code/Models

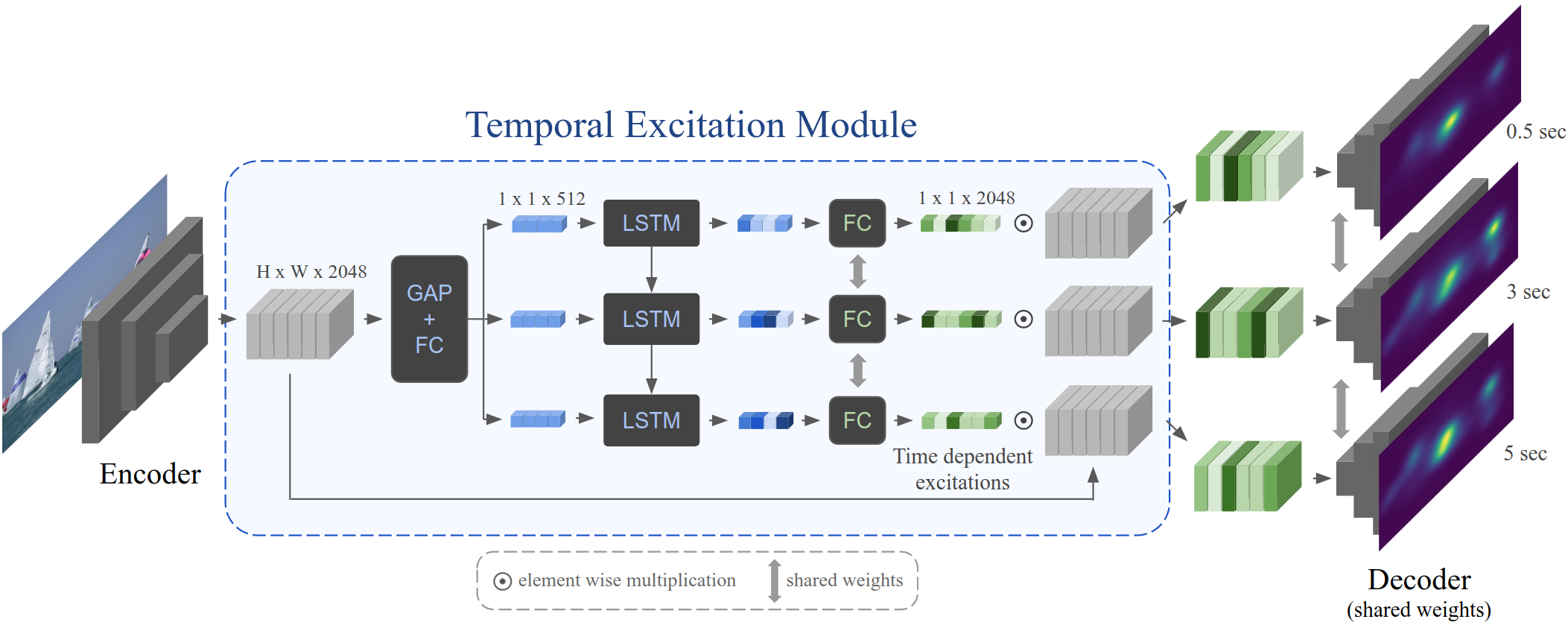

Architecture for our Multi-Duration Saliency Excited Model (MD-SEM).

Applications

Automatic crops based on multi-duration saliency predictions produced by our model.
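One simple way to turn a saliency map into a crop is to slide a fixed-size window over the map and keep the position that captures the most total saliency. A summed-area table lets each window be scored in constant time. This is a generic sketch and not necessarily the cropping method used for the results above. The function `best_crop`, the list-of-lists map format and the duration labels are hypothetical.

```python
from typing import List, Tuple

def best_crop(saliency: List[List[float]], crop_h: int, crop_w: int) -> Tuple[int, int]:
    """Hypothetical helper: return (top, left) of the crop_h x crop_w window
    with the largest summed saliency."""
    h, w = len(saliency), len(saliency[0])
    if not (0 < crop_h <= h and 0 < crop_w <= w):
        raise ValueError("crop must fit inside the saliency map")
    # Summed-area table padded with a zero row/column: sat[i][j] = sum of saliency[:i][:j].
    sat = [[0.0] * (w + 1) for _ in range(h + 1)]
    for i in range(h):
        row_sum = 0.0
        for j in range(w):
            row_sum += saliency[i][j]
            sat[i + 1][j + 1] = sat[i][j + 1] + row_sum
    best, best_pos = float("-inf"), (0, 0)
    for top in range(h - crop_h + 1):
        for left in range(w - crop_w + 1):
            bottom, right = top + crop_h, left + crop_w
            total = sat[bottom][right] - sat[top][right] - sat[bottom][left] + sat[top][left]
            if total > best:  # strict '>' keeps the first window on ties
                best, best_pos = total, (top, left)
    return best_pos

# Toy maps for two hypothetical durations: attention drifts to the lower right over time.
maps = {
    "short": [[0, 0, 0, 0], [0, 9, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]],
    "long":  [[0, 0, 0, 0], [0, 2, 1, 0], [0, 1, 0, 3], [0, 0, 0, 3]],
}
crops = {d: best_crop(s, 2, 2) for d, s in maps.items()}
```

With these toy maps, the 2x2 crop for "short" starts at `(1, 1)` and the crop for "long" starts at `(2, 2)`. This illustrates how crops from different durations can frame different content.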

Publicity

- 06/2020: We presented our paper at CVPR 2020 [teaser video] [poster]

- 06/2020: We presented our paper as a talk at VSS 2020 [talk recording]

- 06/2020: Our work was featured in an MIT News article

- 12/2019: We presented our work at the SVRHM workshop at NeurIPS 2019

Multi-Duration Saliency